The Mathematics of Bluffing

A quick post about poker! That seemingly simple, deceptively complex game with a number of interesting parallels to investing. I just watched the MIT lectures on ‘Poker Theory and Analytics,’ an ‘Independent Activities Period’ mini-course, and for our mutual amusement, I worked through the math on bluffing, which is an interesting problem I had never done the full deep dive into. Here it is, including a Mathematica notebook. Here’s how we set up a pure bluffing scenario:

- There is a $1 pot.

- There are 2 players. Player 1 flips a coin.

- Player 1 looks at the coin, which represents his ‘hand.’ Player 2 does not see the coin.

- If it’s heads, Player 1 has ‘the nuts,’ the winning ‘hand.’

- If it’s tails, Player 1 has the worst possible ‘hand’ and loses to whatever Player 2 has.

- Player 1 gets the option to bet $1, or check.

- If Player 1 bets, Player 2 can call the $1 bet, or fold. If Player 2 folds, Player 1 wins the pot without ‘showing down’ the coin. If Player 2 calls, the coin is revealed and best hand wins the pot (heads: Player 1, tails: Player 2).

- If Player 1 checks, the coin is revealed and best hand wins the pot.

This maps pretty well to a pure bluffing scenario on the river. You have the nuts 50% of the time and the worst possible hand the other 50%. This only covers whether Player 1 should bluff and whether Player 2 should then call. Player 2 doesn’t have the option to bet if you check or to raise if you bet, and the bluff amount is fixed.

How should Player 1 play?

- If Player 1 has the nuts, he (or she) should always bet for value. Why? Betting is always at least as good as checking:

  - If Player 2 folds, Player 1 wins the $1 pot (same as if Player 1 had checked).

  - If Player 2 calls, Player 1 wins $2, the pot plus the called bet, which is better than checking. So betting always has an expected value (EV) >= checking.[1]

- If Player 1 has no hand, it gets more interesting!

Suppose Player 1 bluffs when the coin comes up tails:

- If Player 2 then calls, Player 1 loses the $1 bet. EV: -1.

- If Player 2 folds, Player 1 wins the $1 pot. EV: +1.

Suppose Player 1 checks when the coin comes up tails:

- The coin is revealed and Player 2 wins the pot. EV: 0. (Only 1 outcome since we’re not letting Player 2 bet.)

When Player 1 has nothing, neither strategy dominates.

Here is a ‘pure strategy’ matrix.

| | P2 tight: fold to any bet | P2 loose: call any bet |
|---|---|---|
| P1 passive: value bet heads, check tails | P1 EV: 0.5, P2 EV: 0.5 | P1 EV: 1, P2 EV: 0 |
| P1 aggressive: value bet heads, bluff tails | P1 EV: 1, P2 EV: 0 | P1 EV: 0.5, P2 EV: 0.5 |

There is no stable outcome to this game if each player sticks to a single strategy.

If Player 1 is aggressive, it’s better for Player 2 to be loose: he catches all the bluffs for $2, and loses all the value bets for only $1. If Player 2 is loose, it’s better for Player 1 to be passive: he always gets $1 value for betting, and never gets caught bluffing. In each cell, one player is better off moving counterclockwise to the next cell, and they chase each other around the matrix.

In the lingo, there is no pure strategy Nash equilibrium.
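To double-check the matrix, here is a minimal Python sketch that recomputes each cell from the rules of the game and tests whether any cell is a pure strategy Nash equilibrium. The function names (`payoffs`, `is_pure_nash`) are just illustrative choices, not anything from the MIT course or the notebook.

```python
from itertools import product

POT = 1.0  # initial pot, as in the setup above
BET = 1.0  # fixed bet size


def payoffs(p1_bluffs, p2_calls):
    """Return (P1 EV, P2 EV) for one pair of pure strategies, averaged over the coin flip."""
    # Heads: Player 1 always value bets.
    heads = (POT + BET, -BET) if p2_calls else (POT, 0.0)
    # Tails: Player 1 either checks (and loses the pot) or bluffs.
    if not p1_bluffs:
        tails = (0.0, POT)
    elif p2_calls:
        tails = (-BET, POT + BET)
    else:
        tails = (POT, 0.0)
    return (0.5 * (heads[0] + tails[0]), 0.5 * (heads[1] + tails[1]))


def is_pure_nash(p1_bluffs, p2_calls):
    """True if neither player gains by unilaterally switching pure strategy."""
    ev1, ev2 = payoffs(p1_bluffs, p2_calls)
    ev1_if_p1_switches = payoffs(not p1_bluffs, p2_calls)[0]
    ev2_if_p2_switches = payoffs(p1_bluffs, not p2_calls)[1]
    return ev1 >= ev1_if_p1_switches and ev2 >= ev2_if_p2_switches


for p1_bluffs, p2_calls in product([False, True], repeat=2):
    p1 = "aggressive" if p1_bluffs else "passive"
    p2 = "loose" if p2_calls else "tight"
    print(p1, p2, payoffs(p1_bluffs, p2_calls), is_pure_nash(p1_bluffs, p2_calls))
```

Every cell fails the check: in each one, exactly one player does better by switching, which is the chase around the matrix described above.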

Now suppose each player can choose a mixed strategy. Player 1 randomly picks ‘aggressive’ pbluff% of the time. Player 2 randomly picks ‘loose’ pcall% of the time.

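Now, if Player 2 calls 50% of the time, Player 1 is indifferent between bluffing and checking when he has no hand: a bluff wins the $1 pot half the time and loses the $1 bet the other half, for an EV of 0, the same as checking. Calling at random 50% of the time is an unexploitable strategy for Player 2.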

Now suppose Player 1 bets 50% of the time he has no hand, and gives up the pot 50% of the time. This strategy presents Player 2 with a ratio of 2 value bets for every bluff.

- 50% of hands Player 1 has the nuts and bets

- 25% of hands Player 1 has no hand and bluffs.

- 25% of hands Player 1 has no hand and checks, giving up the pot.

If Player 2 calls, 1/3 of the time he wins $2 ($1 pot + $1 bet) and 2/3 of the time he loses $1. This has an EV of 0, same as folding. Player 2 is indifferent to calling or folding. Bluffing at random with 1/3 of the bets (that is, 50% of the time the coin is tails) is an unexploitable strategy for Player 1.

If Player 1 always bets for value on heads and bluffs 50% of the time on tails, while Player 2 calls 50% of the time, this is a mixed-strategy Nash equilibrium: neither player can improve by changing the mix of strategies.

Player 2 breaks even on calls. Player 1 breaks even on bluffs but wins $1.50 on average on each value bet (the $1 pot, plus an additional $1 the 50% of the time the bet is called). Player 1 gets $0.75 of the overall EV vs. $0.25 for Player 2. By bluffing, Player 1 gets 50% more EV per hand than by only betting for value. As an exercise, think about what happens to each player’s EV if one of them switches strategies.
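Here is a small sketch of that exercise in Python, assuming the $1 pot and $1 bet from the setup. The helper names and the choice of scenarios are my own illustration. It computes each player’s EV per hand for any mix of bluffing and calling frequencies.

```python
def ev_player1(bluff_on_tails, call, bet=1.0, pot=1.0):
    """Player 1's EV per hand.

    bluff_on_tails: probability Player 1 bets when the coin is tails.
    call: probability Player 2 calls a bet.
    """
    heads = pot + call * bet  # value bet: win the pot, plus the bet when called
    tails = bluff_on_tails * ((1 - call) * pot - call * bet)  # checking is worth 0
    return 0.5 * heads + 0.5 * tails


def ev_player2(bluff_on_tails, call, bet=1.0, pot=1.0):
    """The two EVs always sum to the pot, so Player 2 gets the remainder."""
    return pot - ev_player1(bluff_on_tails, call, bet, pot)


scenarios = {
    "equilibrium (bluff 50%, call 50%)": (0.5, 0.5),
    "P1 stops bluffing, P2 still calls 50%": (0.0, 0.5),
    "P1 stops bluffing, P2 adapts and always folds": (0.0, 0.0),
    "P2 always calls, P1 still bluffs 50%": (0.5, 1.0),
    "P2 always calls, P1 adapts and never bluffs": (0.0, 1.0),
}
for label, (b, c) in scenarios.items():
    print(f"{label}: P1 EV = {ev_player1(b, c):.2f}, P2 EV = {ev_player2(b, c):.2f}")
```

A unilateral switch away from the equilibrium leaves both EVs at 0.75 and 0.25 as long as the other player keeps mixing 50/50. The damage only shows up once the opponent adapts to the new strategy.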

So, that’s the picture of the problem and the Nash equilibria. Now let’s solve it more generally and get some more intuition for what the solution and P/L look like for different bet sizes.

Suppose we set up the problem more generally:

P: initial pot =1

S: bet size as fraction of pot

pbluff: probability of bluff

pcall: probability of call

Q: How often should Player 1 bluff?

A: Often enough to make Player 2 indifferent to calling or folding.

EVcall: 1+S when Player 2 calls a bluff. -S when Player 2 calls a value bet.

EVfold: 0

Setting Player 2’s EVcall = EVfold

$$p_{bluff}(1 + S) - (1 - p_{bluff})S = 0$$

Solving for pbluff:

$$p_{bluff} = \frac{S}{1 + 2S}$$

There’s a simpler way of expressing this. Define the ratio of bluffs to value bets as

$$r = \frac{p_{bluff}}{1 - p_{bluff}}$$

Then

$$r = \frac{S}{1 + S}$$

In our example, S = 1, since the bet equals the pot, so our game-theory optimal bluffing ratio is 1/2: we should bluff half as often as we value bet.
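As a sanity check, here is a short Python sketch that solves Player 2’s indifference condition for a few bet sizes, using exact fractions. Here pbluff is read as the share of Player 1’s bets that are bluffs, which matches the 1/3 figure from the worked example. The function names are illustrative.

```python
from fractions import Fraction


def bluff_share_of_bets(s):
    """Share of Player 1's bets that are bluffs: solves p(1+S) - (1-p)S = 0 for p."""
    return s / (1 + 2 * s)


def bluff_to_value_ratio(s):
    """Bluffs per value bet, p / (1 - p), which simplifies to S / (1 + S)."""
    return s / (1 + s)


def caller_ev(p_bluff, s):
    """Player 2's EV of calling when a share p_bluff of the bets are bluffs."""
    return p_bluff * (1 + s) - (1 - p_bluff) * s


for s in [Fraction(1, 4), Fraction(1, 2), Fraction(1), Fraction(2)]:
    p = bluff_share_of_bets(s)
    print(f"S = {s}: bluff share = {p}, bluffs per value bet = {bluff_to_value_ratio(s)}, "
          f"caller EV = {caller_ev(p, s)}")
```

Because the coin is fair, heads and tails are equally likely, so the bluff-to-value ratio is also the probability of bluffing when the coin comes up tails. For S = 1 that is the 50% used earlier.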

Q: How often should Player 2 call?

A: Often enough to make Player 1 indifferent to checking or bluffing.

EVbluff: P=1 when Player 2 folds. -S when Player 2 calls a bluff bet of S.

EVcheck: 0

$$EV_{bluff} = (1 - p_{call}) - p_{call}S$$

Setting Player 1’s EVbluff = EVcheck

$$(1 - p_{call}) - p_{call}S = 0$$

Solving for pcall:

$$p_{call} = \frac{1}{1 + S}$$
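The sketch below (Python, with illustrative names) solves Player 1’s indifference condition and shows what happens if Player 2 calls a little too rarely or a little too often: Player 1’s best response with a losing hand flips to a pure strategy.

```python
from fractions import Fraction


def call_probability(s):
    """Call frequency that makes a bluff break even: solves (1-p) - pS = 0 for p."""
    return 1 / (1 + s)


def bluff_ev(p_call, s):
    """Player 1's EV of bluffing into a pot of 1 with a bet of s."""
    return (1 - p_call) - p_call * s


def best_response_with_tails(p_call, s):
    """What Player 1 should do with a losing hand, given Player 2's call frequency."""
    ev = bluff_ev(p_call, s)
    if ev > 0:
        return "always bluff"
    if ev < 0:
        return "never bluff"
    return "indifferent"


s = Fraction(1)  # pot-sized bet, as in the example
equilibrium_call = call_probability(s)
for p_call in [equilibrium_call - Fraction(1, 4), equilibrium_call, equilibrium_call + Fraction(1, 4)]:
    print(f"p_call = {p_call}: bluff EV = {bluff_ev(p_call, s)}, "
          f"best response = {best_response_with_tails(p_call, s)}")
```

Only the exact indifference frequency leaves Player 1 with nothing to exploit, which is the sense in which it is unexploitable.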

Plotting bluff ratio, call probability, and EV as a function of bet size S (as a fraction of the pot):

Interpretation: When the bet size (S) is close to 0 as a fraction of the pot, it is always worth it for Player 2 to call a small bet to get a chance at a big pot and to keep Player 1 honest. As S → 0, calls approach 100%, Player 1’s best response is to never bluff, and the players just split the pot (EV of 0.5 each). As the bet size goes up, it becomes more costly for Player 2 to call a big bet to win a small pot and keep Player 1 honest. Therefore bluff frequency goes up as S goes up, and Player 1 gets a higher fraction of the pot. As S → ∞, Player 1’s EV → 1 and Player 2’s EV → 0.

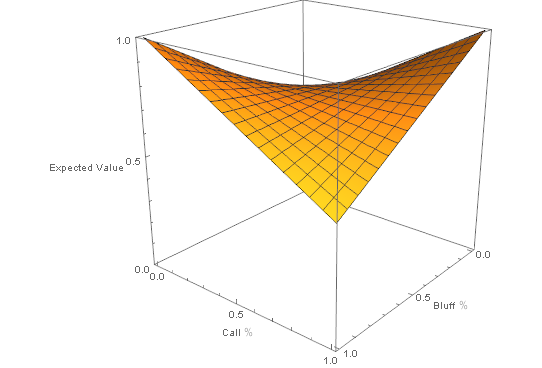
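For readers who want the numbers behind a plot like this, here is a minimal Python sketch that tabulates the equilibrium quantities for a few bet sizes. It assumes a pot of 1 and uses the fact that bluffs break even at equilibrium, so all of Player 1’s EV comes from value bets. The function name and the chosen values of S are illustrative.

```python
def equilibrium(s):
    """Equilibrium bluff ratio, call probability, and EVs for a bet of s times a pot of 1."""
    bluff_ratio = s / (1 + s)  # bluffs per value bet
    p_call = 1 / (1 + s)       # Player 2's calling frequency
    # Heads (half of all hands): win the pot, plus the bet whenever it is called.
    # Tails: bluffing and checking are both worth 0 at equilibrium.
    ev_p1 = 0.5 * (1 + s * p_call)
    ev_p2 = 1 - ev_p1          # the EVs always sum to the pot
    return bluff_ratio, p_call, ev_p1, ev_p2


print("     S  bluff ratio  p_call  P1 EV  P2 EV")
for s in [0.0, 0.25, 0.5, 1.0, 2.0, 10.0, 100.0]:
    ratio, p_call, ev1, ev2 = equilibrium(s)
    print(f"{s:6.2f}  {ratio:11.3f}  {p_call:6.3f}  {ev1:5.3f}  {ev2:5.3f}")
```

At S = 0 the players split the pot, at S = 1 the split is 0.75 to 0.25, and Player 1’s share keeps climbing toward 1 as the bet grows.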

If we do a 3d plot of EV against each player’s strategy with S = 1, we get this:

Here is an interactive version you can explore from various angles by clicking and dragging.

It’s a saddle anchored at 2 upper corners (EV = 1) and 2 lower corners (EV = 0.5). The four corners represent pure strategies of 100% aggressive/passive/tight/loose. At the Nash equilibrium of bluff ratio = 0.5 and call % = 0.5, the 2 axes along which each player can adjust his strategy form a horizontal plane: neither can unilaterally improve by changing strategy. However, if one player moves away from the Nash equilibrium on the axis representing his strategy, he becomes vulnerable to exploitation by the other: movement along the other player’s strategy axis can improve the other player’s EV.
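The saddle can be reproduced numerically without any plotting. The Python sketch below assumes the surface shows Player 1’s EV, with one axis for the probability of bluffing on tails and the other for the probability of calling. It prints a coarse grid for S = 1 and then samples the two slices through the equilibrium. The function name is illustrative.

```python
def ev_player1(bluff, call, s=1.0):
    """Player 1's EV per hand with a pot of 1 and a bet of s."""
    value_bets = 0.5 * (1 + call * s)                  # heads: pot, plus bet when called
    bluffs = 0.5 * bluff * ((1 - call) - call * s)     # tails: bluff EV, checking is 0
    return value_bets + bluffs


grid = [i / 4 for i in range(5)]  # 0, 0.25, 0.5, 0.75, 1

print("rows: bluff probability on tails; columns: call probability")
print("       " + "  ".join(f"{c:5.2f}" for c in grid))
for b in grid:
    print(f"{b:5.2f}  " + "  ".join(f"{ev_player1(b, c):5.2f}" for c in grid))

# Slices through the equilibrium point (0.5, 0.5): both are flat.
along_bluff_axis = [ev_player1(b, 0.5) for b in grid]
along_call_axis = [ev_player1(0.5, c) for c in grid]
print("EV along bluff axis at call = 0.5:", along_bluff_axis)
print("EV along call axis at bluff = 0.5:", along_call_axis)

# Off equilibrium the surface tilts: if Player 2 calls only 25% of the time,
# bluffing more strictly raises Player 1's EV.
print("call = 0.25:", ev_player1(0.0, 0.25), "->", ev_player1(1.0, 0.25))
```

The corner values match the pure strategy matrix, and the flat slices are the horizontal directions described above.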

If we change S from 0 to 1, we see something like this (rotated 90° clockwise from above):

Conclusions: 1) Poker is a pretty deep game (this wasn’t as quick a post as I thought!) and 2) This is a way to get some game theory intuition about one part of the game: bluffing and calling. If you’re into this sort of thing, I recommend the MIT online poker class mentioned above (this is a deeper dive into part of the last lecture by Matt Hawrilenko), and Mathematics of Poker, by Bill Chen, a math and finance quant at Susquehanna who has won a couple of WSOP bracelets.

This is just an analysis of a simplified model of one street! And yet, heads-up limit hold’em was recently weakly solved. Computers simulated 2 players playing each other as well as possible for 900 CPU years, iteratively improving complex strategies (terabyte databases of all possible moves and responses) until they were provably so good that no one could have an advantage of more than 1 big blind per 1,000 hands. See if you can beat that near-perfect strategy!

No-limit hold’em with up to 10 players adds giant levels of complexity… No-limit bots are pretty good, but the pros still beat them.

_Note: the first version of this post had a dumb error and some wonky graphs but I think it’s all good now. If you see any mistakes let me know!_

[1] We say that the Player 1 strategy of always betting when the coin comes up heads dominates the strategy of checking: it is sometimes a better decision and always at least as good. If it is always strictly better than an alternative strategy, we say it strictly dominates. In our case Player 1’s strategy of always betting heads sometimes leads to the same outcome as checking (if Player 2 never calls), so we say it weakly dominates the strategy of always checking.